Cropping my webcam with ffmpeg

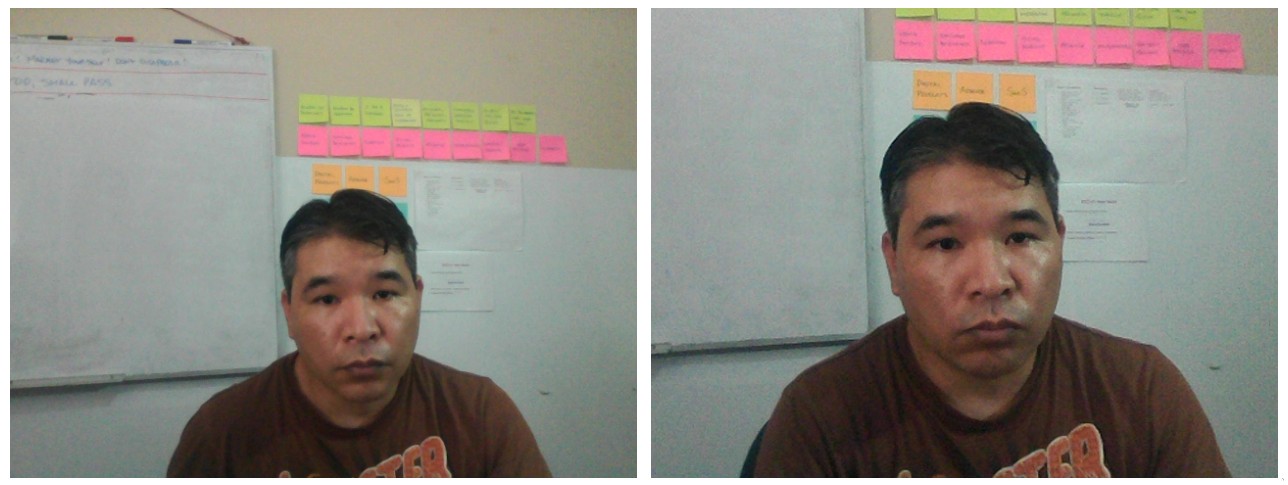

My laptop is on a riser, at a healthy distance and height from me. But when I do video calls, you see a lot of my surroundings (which aren’t great) and little of me. I sometimes tilt the screen so that I’m vertically centered in the frame.

Since I’d learnt that ffmpeg can process video in real time, I decided this would be a good problem to solve and learn with!

I created a loopback video4linux device, then ran ffmpeg to read my built-in laptop webcam’s stream, crop it, sharpen it, add a tiny bit of color saturation and feed the result into the loopback device. It looks pretty good! Not MacBook Pro-webcam-good, but good!
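For anyone following along, here is a minimal sketch of the loopback step. It assumes the device comes from the v4l2loopback kernel module, which the post doesn't name. `video_nr=3` is chosen to match the /dev/video3 used in the command further down, the card label is a made-up name, and `exclusive_caps=1` is an option that, as far as I know, some browsers need before they list the device. The helper `loopback_modprobe_cmd` is hypothetical: it only prints the command so you can review it before running it as root.

```shell
# Assumption: the loopback device is provided by the v4l2loopback kernel module.
VIDEO_NR=3                    # should create /dev/video3, as used by the ffmpeg command
CARD_LABEL="Cropped webcam"   # hypothetical name shown in video call apps

# Hypothetical helper: print the modprobe command instead of running it,
# so nothing needs root until the line has been reviewed.
loopback_modprobe_cmd() {
    printf 'modprobe v4l2loopback video_nr=%s card_label="%s" exclusive_caps=1\n' "$1" "$2"
}

loopback_modprobe_cmd "$VIDEO_NR" "$CARD_LABEL"
```

Run the printed line with sudo, then check with `v4l2-ctl --list-devices` (from v4l-utils, if installed) that the new device shows up.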

Here’s the original webcam output and the final ffmpeg result, side by side:

Here’s my ffmpeg command, broken up for readability:

    ffmpeg -f v4l2 \
      -framerate 30 \
      -video_size 640x480 \
      -input_format mjpeg \
      -i /dev/video1 \
      -vcodec rawvideo \
      -pix_fmt yuv420p \
      -filter:v "crop=494:370:103:111,scale=640:480,setsar=1,unsharp,eq=saturation=1.2" \
      -f v4l2 \
      /dev/video3

The magic happens in -filter:v. Split it at the commas and you’ll see each step: cropping, scaling back up to 640×480, resetting the pixel aspect ratio to square, (un)sharpening and a color saturation boost.
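To make the numbers less magic: `crop=w:h:x:y` keeps a w×h window whose top-left corner sits at (x, y), `scale` resizes it, `setsar=1` marks the pixels as square, `unsharp` with no arguments uses its default (sharpening) settings, and `eq=saturation=1.2` raises saturation from its default of 1.0. The sketch below rebuilds the same filter string from a crop width and offsets, deriving the crop height so the window keeps the 640×480 aspect ratio. `build_filter` is a hypothetical helper, not part of ffmpeg, and I'm only assuming the original values were picked along these lines.

```shell
# Hypothetical helper: build the -filter:v chain for a crop that keeps the
# output aspect ratio.
# Arguments: output width, output height, crop width, crop x offset, crop y offset.
build_filter() {
    out_w=$1; out_h=$2; crop_w=$3; x=$4; y=$5
    # Derive the crop height from the crop width (integer division, rounds down).
    crop_h=$(( crop_w * out_h / out_w ))
    printf 'crop=%s:%s:%s:%s,scale=%s:%s,setsar=1,unsharp,eq=saturation=1.2\n' \
        "$crop_w" "$crop_h" "$x" "$y" "$out_w" "$out_h"
}

build_filter 640 480 494 103 111
# prints: crop=494:370:103:111,scale=640:480,setsar=1,unsharp,eq=saturation=1.2
```

A smaller crop width zooms in further, and the offsets move the window around the frame. The result can be passed straight to ffmpeg, e.g. `-filter:v "$(build_filter 640 480 494 103 111)"`.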

I had never taken the time to learn ffmpeg properly. The learning curve is a bit steep, but it makes perfect sense; it’s very well designed.