Something I don’t like about Obsidian’s wiki link syntax is that the link comes first and the label second:

[[Link|Display Text]]

This breaks the reading flow, because if I write:

Whatever happened [[Daily Notes/Daily Notes 2023-11-01|the other day]]

You inevitably read «Whatever happened [[Daily Notes…», then hit the | character, and only then realize: «oh, the text meant to be read was ‹the other day›, that makes sense now.»

This is no problem while working in Obsidian, because Obsidian renders it as an HTML link: you read «Whatever happened the other day» and never see the link target. That’s great, but I spend most of my time in Vim/Neovim. I would have preferred that Obsidian used the other wiki link format, «label first, link second», like this:

Whatever happened [[the other day|Daily Notes/Daily Notes 2023-11-01]]

It feels more natural to me. You read «Whatever happened [[the other day», and when you reach the | character, you know the rest is just where it links to. The reading flow doesn’t break.

Then I remembered Vim’s conceal feature. With the right syntax highlighting rules, Vim can hide the link target and show only the label, just like Obsidian!

I figured there might be no need to start from scratch: maybe some syntax file already parsed, at the very least, the link and the label separately, and I could build from there. I looked at Wiki.vim’s own syntax highlighting, but it doesn’t parse the label and link parts separately. Neither does a MediaWiki syntax file for Vim, nor Pandoc’s syntax file for Vim.

Change of plans: I had to do it from scratch. That meant learning two things: how to create new syntax highlighting rules, and how Vim’s regular expressions work.
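Here is a minimal sketch of what such rules can look like, written for illustration rather than copied from my actual syntax file. It assumes the label-first `[[label|link]]` format and a file such as `~/.vim/after/syntax/markdown.vim` (in Neovim, `~/.config/nvim/after/syntax/markdown.vim`); the group names `wikiLink`, `wikiLinkOpen` and `wikiLinkTarget` are made up. One `syntax match` finds the whole link, and two `contained` matches with the `conceal` argument hide the opening `[[` and the `|link]]` tail, leaving only the label visible.

```vim
" Sketch: conceal label-first wiki links [[label|link]] so only the label shows.
" Group names (wikiLink, wikiLinkOpen, wikiLinkTarget) are hypothetical.

" Hide concealed text completely (no replacement character).
setlocal conceallevel=2

" The whole link: "[[", a label without "]" or "|", a "|",
" a target without "]", then "]]".
syntax match wikiLink /\[\[[^]|]\+|[^]]\+]]/ contains=wikiLinkOpen,wikiLinkTarget

" Inside a link: the opening brackets...
syntax match wikiLinkOpen /\[\[/ contained conceal
" ...and everything from the "|" up to the closing brackets.
syntax match wikiLinkTarget /|[^]]\+]]/ contained conceal

" Display the remaining label like a link.
highlight default link wikiLink Underlined
```

Because 'concealcursor' is empty by default, the raw link reappears on the line under the cursor, so the target stays editable; every other line just reads «Whatever happened the other day», with the label underlined.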

And so I did. I learnt in every little pocket of time I had: when I was up at 2 AM with a tummy ache, while singing the kids to sleep, whenever Thais was busy baking, and so on. I devoured tutorials and the Vim manual. By the way, Neovim has a better, navigable manual.

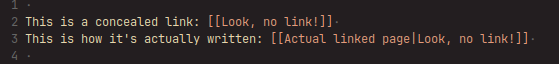

This is what it looks like:

It works great, and I feel awesome! I feel like I own Vim at a new level. I’m now thinking about how to leverage this new skill, and about the fact that I’m no longer at the mercy of a colorscheme that would be perfect if only the comment color were a bit darker. Now I can tweak it to my liking, and even write new rules or copy rules between syntax files.
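That kind of tweak can be as small as this sketch, which overrides the `Comment` group on top of whatever colorscheme is loaded. The augroup name `CommentColorTweak` and the color values are placeholders. The override lives in a `ColorScheme` autocommand because colorschemes usually start by clearing the existing highlights, so a plain `:highlight` line would be lost on the next scheme change.

```vim
" Sketch: override one highlight group on top of any colorscheme.
" The augroup name and the colors below are arbitrary placeholders.
augroup CommentColorTweak
  autocmd!
  " Runs after every :colorscheme, so the override survives scheme changes.
  autocmd ColorScheme * highlight Comment ctermfg=240 guifg=#585858
augroup END
```

Put it before the `colorscheme` line in your vimrc (or init.vim); otherwise the first scheme loads before the autocommand exists.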

And Vim is so powerful! Everything is so well thought out. It carries the experience of decades of work, so the syntax highlighting engine handles a lot and already has solutions for problems you might stumble upon: over those decades, many people stumbled upon them already, and they were solved. Elegantly.